1. Introduction

Imagine you’re watching your favorite show on Netflix. As the next episode auto-plays, you might not realize that behind the scenes, there’s a complex backend system working tirelessly to serve you a seamless experience. Now, take a moment to think about what makes these systems not only functional but also smart. That’s where machine learning (ML) enters the picture—a transformative technology that breathes life into backend development, turning it into something dynamic and innovative.

Imagine you’re watching your favorite show on Netflix. As the next episode auto-plays, you might not realize that behind the scenes, there’s a complex backend system working tirelessly to serve you a seamless experience. Now, take a moment to think about what makes these systems not only functional but also smart. That’s where machine learning (ML) enters the picture—a transformative technology that breathes life into backend development, turning it into something dynamic and innovative.

Backend systems are like the unsung heroes of technology. They don’t get the spotlight but handle the heavy lifting. Whether it’s a payment gateway processing millions of transactions per second or a social media platform keeping billions connected, backends form the backbone of our digital world. But here’s the thing: traditional backends can only do so much. With increasing complexity and the need for smarter solutions, ML has become a necessity.

So, why should you care about ML in backend development? Great question. Let’s explore how this game-changing technology is reshaping the way backends are designed, deployed, and maintained.

a. A World Without Machine Learning

Imagine driving a car with no GPS, cruise control, or smart features. Sure, you’ll get to your destination, but the journey will be riddled with inefficiencies. That’s what backend systems were like before ML—a lot of manual effort, trial-and-error optimizations, and reactive problem-solving.

Now, imagine a car that adapts to traffic conditions, predicts fuel needs, and even suggests alternate routes. That’s the leap ML provides to backends. It automates, predicts, and personalizes, making systems not just functional but intuitive.

Fun Fact: Did you know that Amazon’s recommendation engine contributes to 35% of its revenue? This wouldn’t be possible without ML-powered backends analyzing user data in real time.

b. Why Machine Learning is a Big Deal

- Anticipating Needs:

Ever wondered how Google Maps predicts traffic or Spotify curates playlists? These aren’t lucky guesses. ML models analyze patterns and behaviors, anticipating what users will need next. - Handling Complexity:

Modern systems handle billions of requests per day. Managing this scale manually is a nightmare. ML automates resource allocation, error detection, and even user authentication, making these systems smarter and faster. - Personalization at Scale:

Today’s users demand personalized experiences. Whether it’s Netflix suggesting what to watch next or e-commerce platforms showcasing items you’re likely to buy, ML ensures these experiences are tailored, even for millions of users simultaneously.

c. From Reactive to Proactive Systems

Traditional backends are reactive. They wait for something to break, then fix it. Machine learning flips this script. It enables proactive systems that predict issues before they occur. For instance:

- An e-commerce platform can predict server load spikes during sales events and allocate resources in advance.

- A banking system can identify unusual transactions and flag potential fraud instantly.

This proactive approach not only saves time and money but also enhances user trust and satisfaction.

Pro Tip: Incorporating even a basic anomaly detection algorithm can significantly improve backend reliability.

d. Real-World Transformations

ML isn’t just a theoretical concept; it’s revolutionizing industries:

- Healthcare: Backend ML systems analyze patient records to predict disease risks and recommend treatments.

- Retail: Dynamic pricing and stock optimization are driven by backend ML algorithms.

- Entertainment: Platforms like YouTube use backend ML to analyze watch times, generating personalized recommendations.

e. A Glimpse into the Future

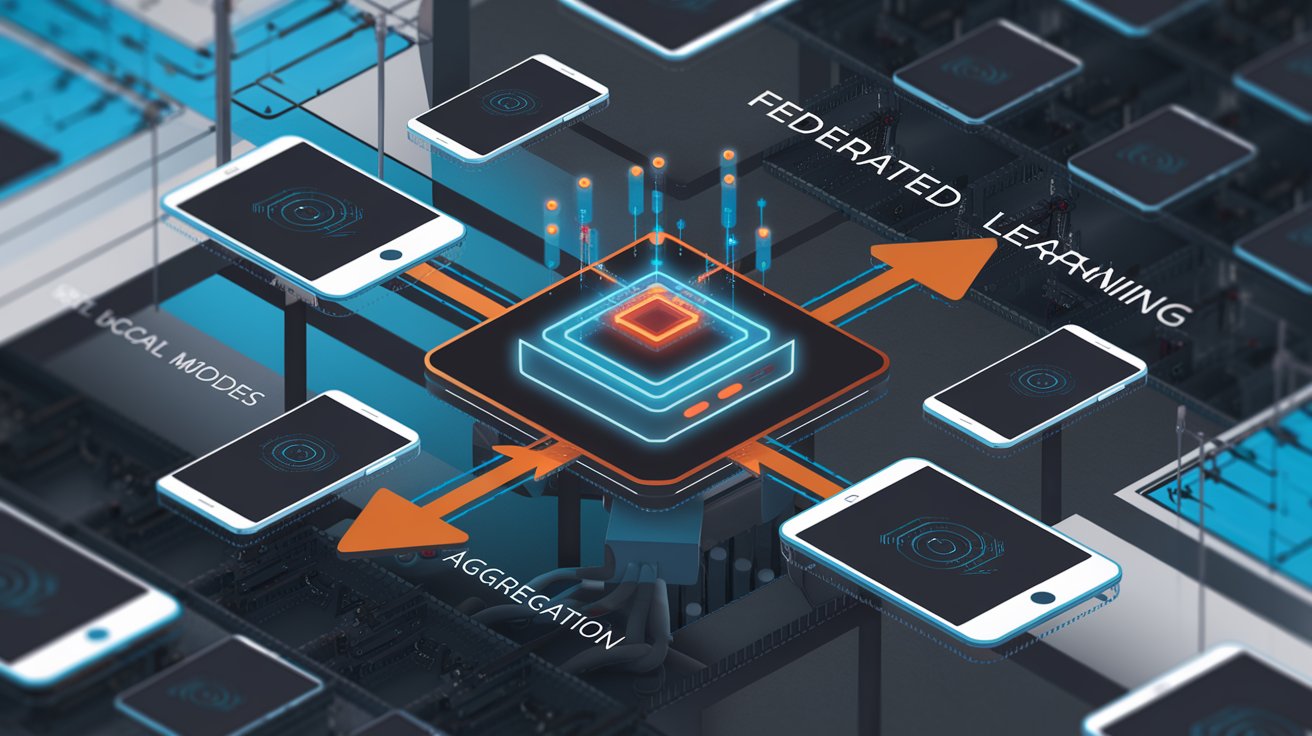

The integration of ML into backend systems is only the beginning. With advancements in quantum computing, federated learning, and artificial intelligence ethics, we’re looking at a future where backends not only respond to user needs but also align with global trends and values.

Thought-Provoking Idea: What if your smart home’s backend could anticipate your mood based on your activity patterns and adjust lighting or music accordingly? This isn’t far-fetched; it’s the next step in backend innovation.

f. Why This Blog is Your Guide

By the time you finish this blog, you’ll have a solid understanding of 15 innovative ML techniques that are reshaping backend development. But it’s not just about knowing the concepts—it’s about understanding how to apply them effectively, even if you’re not a data scientist.

And here’s the kicker: You don’t need a PhD to grasp these ideas. We’ll explain everything in a conversational tone, sprinkled with humor and relatable examples. Because learning should be fun, right?

g. Call to Adventure

Ready to dive into the fascinating world of ML-powered backend systems? Let’s get started with an engaging exploration of these techniques, how they’re applied, and why they’re changing the game. Trust me, by the end, you’ll be looking at backend development in an entirely new light.

Fun Fact to Wrap Up: Did you know the term “machine learning” was coined in 1959 by Arthur Samuel, a computer scientist who taught a computer to play checkers? It was groundbreaking then, and it’s reshaping the world now.

2. The Importance of Machine Learning in Backend Development

If we were to think of backend systems as the invisible gears of the digital world, then machine learning (ML) would be the oil that keeps those gears running smoothly. It’s not just a cool buzzword you hear about in tech conferences; ML has become an integral part of modern backend development, helping businesses stay ahead of the curve, optimize their processes, and deliver an enhanced user experience. So, why is ML so important in backend development? Let’s dive in and explore how it’s changing the game.

If we were to think of backend systems as the invisible gears of the digital world, then machine learning (ML) would be the oil that keeps those gears running smoothly. It’s not just a cool buzzword you hear about in tech conferences; ML has become an integral part of modern backend development, helping businesses stay ahead of the curve, optimize their processes, and deliver an enhanced user experience. So, why is ML so important in backend development? Let’s dive in and explore how it’s changing the game.

a. From Basic to Brainy: The Evolution of Backend Systems

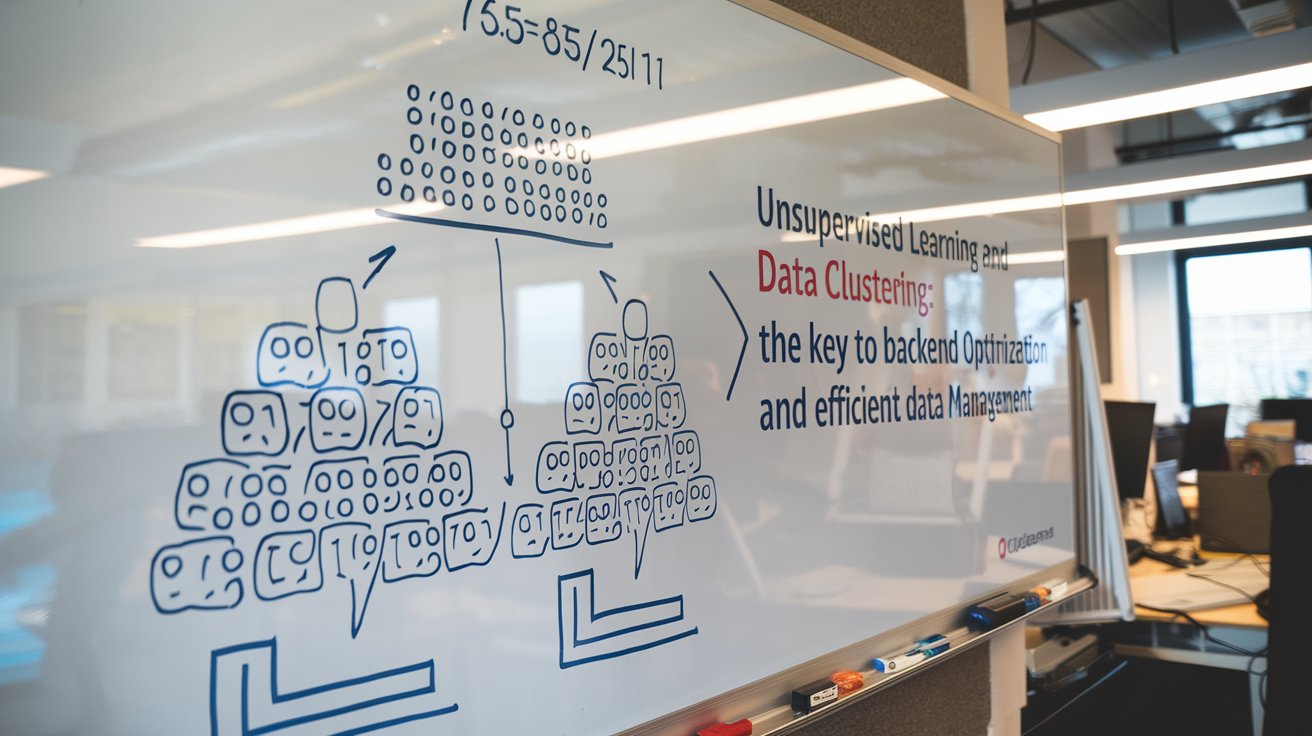

In the early days of backend development, systems were fairly simple. Databases stored information, servers delivered requests, and business logic was straightforward. However, as the digital world grew, so did the need for smarter, more efficient systems. Manual optimization couldn’t keep up with the ever-increasing data flow, security concerns, and the demand for personalized experiences. That’s when ML stepped in.

Before ML, backend systems were reactive. They only responded to problems after they arose—like a firefighter putting out a fire. But ML is proactive. It doesn’t wait for problems to show up. Instead, it uses data to predict what will happen next and takes preventive action. This shift from reactive to proactive is why ML is such a powerful tool in backend development.

Fun Fact: Did you know that Google’s backend uses machine learning to predict search queries? It doesn’t just look at what you’ve searched for; it anticipates what you might search based on trends, location, and even the weather!

b. How ML Makes Backend Systems Smarter

- Predicting Resource Needs:

Think of ML as a backstage manager who knows exactly how many actors, props, and lights are needed for a show before the curtain even goes up. In backend development, this means predicting traffic spikes, resource shortages, and even potential server failures. With ML, your system doesn’t just react to requests but anticipates them. For example, if your website experiences more traffic during certain times of the year, ML models can forecast this and scale resources accordingly. No more crashing websites or slow loading times! - Automating Routine Tasks:

We all know how repetitive tasks can drain productivity, and in backend development, there’s no shortage of them. But ML can automate many of these processes. Whether it’s data cleansing, sorting through logs to find anomalies, or managing user sessions, ML can handle these tasks faster and more efficiently than any human ever could. Think of it as your trusty assistant who’s always on the ball, never needing a break. - Improving Data Management:

Backend systems are constantly dealing with vast amounts of data. ML helps make sense of this data by automating data classification, error detection, and even recommending optimizations. If your system receives millions of requests per minute, how do you know which ones are valid and which ones are potentially harmful? ML can flag unusual patterns and prevent security threats, allowing backend developers to focus on what truly matters. - Personalization at Scale:

One of the most powerful aspects of ML is its ability to deliver personalized experiences. Whether it’s recommending the next movie on Netflix, products on Amazon, or songs on Spotify, ML takes into account a user’s history, preferences, and behavior to provide recommendations. In backend development, this means ML can tailor the user experience for each individual without the need for constant manual adjustments. It’s like having a personal assistant for each user, always making sure they get the most relevant content.

c. Enhancing Security and User Experience

In a world where cybersecurity threats are on the rise, backend systems need to be as secure as possible. Here’s where ML really shows its muscle. Instead of relying on static firewalls and predefined rules, ML can help systems learn to detect new types of attacks in real-time.

For instance, ML models can analyze login attempts, detect patterns, and flag suspicious activity before it becomes a serious issue. Imagine your backend system noticing an unusual login attempt from an unfamiliar location at 3 a.m. ML algorithms can immediately trigger security measures—like multi-factor authentication or account suspension—without waiting for a human to intervene. This not only keeps data safe but also provides users with peace of mind.

Additionally, ML helps enhance the overall user experience by anticipating what users want and need. Instead of bombarding them with irrelevant information, ML can ensure that users see what they care about most, based on past interactions. This results in a more seamless, engaging experience, leading to higher user satisfaction and retention.

d. Scalability and Flexibility: The Backbone of Growing Systems

As businesses grow, so do their backend systems. What worked for 100 users won’t necessarily work for 1 million. Scaling backend infrastructure used to be a nightmare, requiring manual intervention and constant adjustments. But ML changes all of that by automating scaling decisions.

Imagine an e-commerce platform running a flash sale. Without ML, you’d have to anticipate how many servers to bring online, manually configure them, and hope for the best. With ML, the system can learn from previous sales, predict the traffic load, and automatically adjust resources in real-time, ensuring the site runs smoothly regardless of demand. This not only saves time but ensures your users don’t experience frustrating delays or downtime.

e. Real-Time Data Processing: The Competitive Edge

In today’s fast-paced world, data doesn’t wait. It’s generated every second, and businesses need to process it quickly to make informed decisions. This is where ML comes in. By analyzing data in real-time, ML models can provide instant insights, detect anomalies, and trigger actions based on live data.

For example, imagine a backend system for a stock trading platform. Real-time data is crucial, and ML can analyze price fluctuations, news events, and even social media sentiment to predict market movements. This gives traders a competitive edge, helping them make decisions faster than ever before.

f. Wrapping It Up: Why ML is Here to Stay

Machine learning has quickly moved from a niche technology to a cornerstone of modern backend development. It enables systems to predict, learn, and adapt, making them faster, smarter, and more reliable. From automating routine tasks to improving security, personalization, and scalability, ML is helping backend developers create systems that not only meet user expectations but exceed them.

Whether you’re developing a mobile app, an e-commerce platform, or a social network, ML can enhance your backend in ways you may never have imagined. And as this technology continues to evolve, the possibilities are endless. One thing is certain—machine learning is here to stay, and its impact on backend development will only grow in the coming years.

Pro Tip: Start small with ML integration. Even a simple anomaly detection system can improve backend performance and security significantly.

3. 15 Innovative Machine Learning Techniques for Backend Development

a. Predictive Analytics for Resource Management

If there’s one thing that every backend developer dreads, it’s unexpected traffic spikes or resource shortages that slow down systems and frustrate users. Imagine launching a new product, only to have your site crash because you didn’t anticipate the sheer volume of visitors. Sounds like a nightmare, right? Well, predictive analytics, powered by machine learning (ML), is here to save the day.

If there’s one thing that every backend developer dreads, it’s unexpected traffic spikes or resource shortages that slow down systems and frustrate users. Imagine launching a new product, only to have your site crash because you didn’t anticipate the sheer volume of visitors. Sounds like a nightmare, right? Well, predictive analytics, powered by machine learning (ML), is here to save the day.

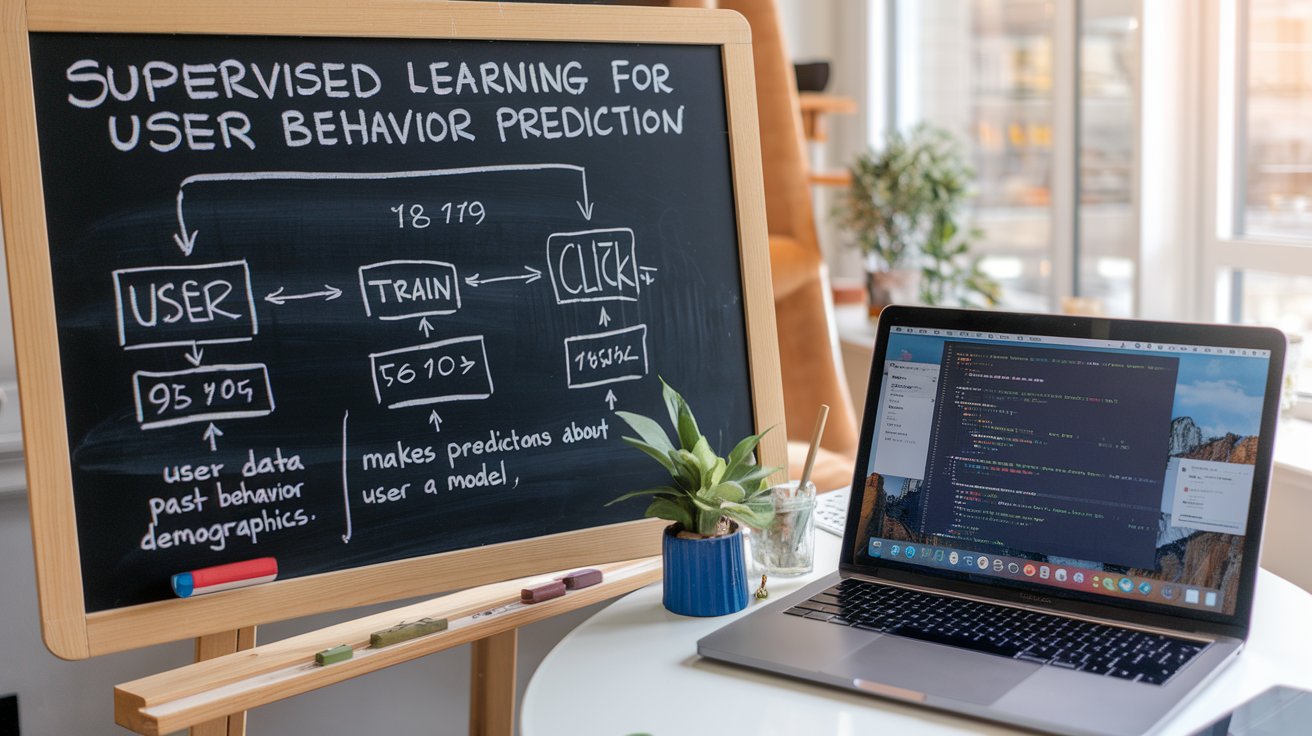

Predictive analytics uses historical data, statistical algorithms, and machine learning techniques to forecast future outcomes. In backend development, this means predicting system needs, user behavior, and resource usage before it becomes a problem. So, how does it work, and why is it so crucial for resource management? Let’s dive into this game-changing technique.

i. The Power of Prediction: How It Works

In simple terms, predictive analytics is like having a crystal ball for your backend system. It doesn’t just rely on past data; it analyzes patterns and trends to forecast future events. For instance, if you’re running an online store, predictive analytics can look at data from previous years to estimate the traffic spike during Black Friday or Cyber Monday. It will predict how many users are likely to visit the site, what products they might buy, and even the load on your servers at peak times. This allows you to allocate resources (like bandwidth, CPU, and memory) ahead of time to ensure a smooth user experience, without any lag or crashes.

ii. Forecasting Resource Needs

In backend systems, resource management is a fine art. You need enough servers to handle your site’s traffic, but not too many that you’re paying for unnecessary infrastructure. Traditional resource management often involves manual scaling based on experience, intuition, or guesswork. But this can lead to over-provisioning (wasting resources) or under-provisioning (leading to downtime). Predictive analytics removes the guesswork and gives you data-driven insights into exactly how many resources you’ll need.

How It Works:

- Data Collection: Predictive analytics starts by gathering historical data—everything from system resource usage (like CPU and RAM) to traffic spikes, user behavior, and seasonal patterns.

- Model Training: ML models are trained on this data to detect patterns and correlations. For example, if your website experiences a spike in traffic every weekend, the model will learn this trend.

- Prediction: Based on these patterns, the model can predict future demands, such as when the next spike will occur, how much traffic will come in, and how much server capacity will be needed.

Pro Tip: You can even use predictive analytics for proactive performance monitoring. If your server’s load is trending towards a threshold, the system can automatically adjust resources without you lifting a finger!

iii. Real-World Applications: How It’s Used in Backend Systems

- E-Commerce Platforms:

For online stores, predicting when and where customers will flock to the site can make all the difference. Predictive analytics can estimate sales spikes during holidays, special events, or product launches. For example, an e-commerce website might experience a massive surge in traffic when a popular product goes on sale. By forecasting this spike, the backend system can scale resources (like additional servers or cloud infrastructure) automatically, ensuring that the website remains operational and responsive. Without predictive analytics, the site could crash under the heavy load, costing the business sales and damaging its reputation. - Streaming Services:

Streaming platforms like Netflix, Hulu, and Spotify experience fluctuating demand based on factors such as the release of new content or the time of day. With predictive analytics, these services can forecast user demand in real-time, ensuring that they have enough bandwidth and server power to deliver uninterrupted streaming experiences. This is especially important during peak times, like when a new season of a popular show drops. By using predictive analytics, streaming services can allocate server resources more efficiently, avoiding slow buffering times and keeping users happy. - Social Media Platforms:

Social media platforms such as Facebook or Instagram rely heavily on real-time data. Predictive analytics allows these platforms to anticipate server loads based on events like viral posts, live streams, or sudden news events. Imagine a huge spike in traffic when a celebrity posts something trending. Predictive analytics helps the platform allocate resources accordingly, ensuring seamless user experience and preventing downtime during high-traffic periods.

iv. How It Improves Efficiency and Cost-Effectiveness

One of the most significant benefits of predictive analytics for resource management is the ability to increase efficiency and reduce costs. With traditional systems, you may have to over-provision your resources to account for worst-case scenarios. But with predictive analytics, you can more accurately predict demand, meaning you only need to scale your resources when necessary.

Cost-Effectiveness Example:

Let’s say you’re running a cloud-based service that offers file storage. By using predictive analytics, you can predict when usage will be low, allowing you to scale down your resources and save money. Alternatively, when usage spikes, the system can automatically scale up resources to accommodate the increase in demand. Without predictive analytics, you might have to keep a larger number of servers running at all times to be on the safe side—leading to higher costs.

v. Enhancing User Experience

Imagine you’re visiting a website, and suddenly, it takes forever to load. Frustrating, right? Predictive analytics can help prevent this by ensuring that backend resources are always available when needed. By predicting future traffic and scaling resources accordingly, predictive analytics helps ensure smooth, responsive websites.

Additionally, predictive analytics can anticipate user needs by analyzing browsing patterns. For example, if a user regularly visits certain pages or makes specific types of purchases, the backend system can preload relevant data, making the experience faster and more personalized.

vi. Predicting Infrastructure Failures

One of the most impressive uses of predictive analytics in backend systems is its ability to predict hardware failures. Systems can monitor server health and predict when a failure might occur based on historical performance data. If predictive analytics detects an impending failure, the system can either alert the developer or automatically switch to a backup system, preventing downtime and loss of service. This proactive approach reduces the need for costly emergency fixes and downtime.

vii. Future-Proofing Your Backend with Predictive Analytics

As businesses grow and data volumes increase, it’s no longer enough to react to issues as they arise. Predictive analytics equips backend systems with the foresight to stay ahead of the curve. It helps backend developers make data-driven decisions, ensuring that their systems can handle future challenges with ease. As the complexity of backend systems grows, predictive analytics will become even more essential for maintaining optimal performance.

viii. Wrapping It Up: Why Predictive Analytics Is a Must-Have for Backend Development

Predictive analytics is no longer a luxury for backend development—it’s a necessity. By forecasting traffic spikes, resource needs, and potential failures, it helps ensure that systems run efficiently and cost-effectively. Whether it’s improving user experience, anticipating infrastructure failures, or reducing costs, predictive analytics makes backend systems smarter, faster, and more reliable.

Machine learning-powered predictive analytics is transforming how we manage backend systems, providing the foresight to proactively address issues before they escalate. As data grows and technology advances, predictive analytics will continue to be an indispensable tool for backend developers and businesses alike.

b. Anomaly Detection in System Logs

When you’re building or managing a backend system, you’re constantly dealing with massive amounts of data. Servers churn through logs faster than you can blink, and these logs are invaluable for keeping your system running smoothly. They tell you what’s happening behind the scenes—tracking errors, server performance, and system activities. But what happens when something unusual occurs in these logs? What if an anomaly—a sudden spike in traffic, a weird request, or an error that wasn’t part of the plan—appears? You may not have the time or resources to sift through these logs manually. And that’s where machine learning-powered anomaly detection steps in.

When you’re building or managing a backend system, you’re constantly dealing with massive amounts of data. Servers churn through logs faster than you can blink, and these logs are invaluable for keeping your system running smoothly. They tell you what’s happening behind the scenes—tracking errors, server performance, and system activities. But what happens when something unusual occurs in these logs? What if an anomaly—a sudden spike in traffic, a weird request, or an error that wasn’t part of the plan—appears? You may not have the time or resources to sift through these logs manually. And that’s where machine learning-powered anomaly detection steps in.

Anomaly detection is like having a digital detective watching your system 24/7, looking for anything that doesn’t belong. It analyzes your logs in real time, automatically identifying outliers or unexpected patterns that could signal problems like security breaches, bugs, or inefficiencies. Think of it as your personal watchdog, tirelessly scanning for anything unusual, so you don’t have to. Let’s break down why anomaly detection is so crucial in backend development and how it can help save time, resources, and—most importantly—your sanity.

i. What is Anomaly Detection in System Logs?

At its core, anomaly detection involves using machine learning algorithms to identify patterns in data and then flagging any data points that deviate from these patterns. When applied to system logs, anomaly detection algorithms can spot outliers, errors, or unexpected events that could indicate potential issues in your backend system.

For instance, let’s say your server is running fine most of the time, but suddenly, the log shows a massive spike in error messages or unusual user activity. This could be an indication of a problem, like a security vulnerability being exploited or a bug causing unexpected behavior. Instead of waiting for these issues to escalate, anomaly detection algorithms can catch them early and alert you to take action before the situation becomes critical.

ii. How Does Anomaly Detection Work in Backend Systems?

Anomaly detection relies on machine learning to understand what “normal” looks like for your backend system. Once the model is trained with historical data, it can spot the anomalies in real-time logs by comparing new data against the established norm.

- Data Collection: The first step in anomaly detection is collecting system logs. These logs can contain a wide variety of data, such as server requests, errors, API calls, and even user behavior. The more comprehensive the dataset, the better the model will perform.

- Model Training: Machine learning models are then trained on this data. This means the algorithm learns what “normal” activity looks like—such as typical request rates, usual error patterns, or expected server response times.

- Real-Time Monitoring: Once the model has been trained, it’s deployed to monitor incoming system logs in real time. As new logs come in, the algorithm checks for deviations from the learned patterns. If something unusual happens—like a sudden spike in errors or an unexpected user behavior pattern—the system flags this as an anomaly and sends an alert to the backend team.

Pro Tip: Some anomaly detection models use techniques like clustering or neural networks to identify outliers. Clustering groups similar data points together and flags anything that doesn’t fit the group as an anomaly. Neural networks, on the other hand, learn intricate patterns in data, allowing them to detect more complex anomalies.

iii. Types of Anomalies Detected in System Logs

Anomaly detection isn’t a one-size-fits-all solution. It can be customized to detect various types of anomalies, depending on what you’re looking for. Below are some common types of anomalies that are typically flagged in system logs:

- Statistical Anomalies: These anomalies occur when the data points are far from the mean or expected value. For example, a sudden spike in server error rates or an unexpected increase in database queries might be flagged as a statistical anomaly.

- Contextual Anomalies: Contextual anomalies arise when a certain event seems unusual within a specific context but might not be out of the ordinary when viewed over a longer period or in a different context. For example, a spike in traffic could be expected on a major holiday, but it might be an anomaly if it happens at a random time without any promotional events.

- Collective Anomalies: This type of anomaly occurs when multiple data points, when taken together, deviate from the norm. It could indicate a larger system-wide problem, like a DDoS attack or a performance bottleneck in your backend infrastructure.

iv. Benefits of Anomaly Detection in Backend Development

- Proactive Issue Resolution: With machine learning-powered anomaly detection, you’re not just reacting to problems as they arise—you’re staying ahead of the curve. Early detection of issues like system crashes, security breaches, or performance bottlenecks helps you address problems before they snowball into major incidents.

- Enhanced Security: In the world of backend systems, security is a constant concern. Anomaly detection is particularly useful for spotting abnormal patterns that could indicate a potential security threat. For instance, unusual login attempts or an unexpected surge in API requests might suggest a brute-force attack or other malicious activity. By flagging these anomalies early, you can protect your system from cyber threats.

- Improved System Efficiency: Anomalies often highlight inefficiencies or bugs that can affect system performance. For example, a sudden surge in memory usage might be a sign of a memory leak, and an unusual increase in database queries could point to an inefficient query or an issue in your application’s code. By detecting these anomalies early, you can optimize your backend system to run more efficiently.

- Reduced Downtime and Cost: Every minute your backend system is down or not performing optimally, it costs you. Anomaly detection helps reduce downtime by catching issues before they escalate into full-blown outages. Additionally, by identifying inefficiencies early on, you can save on resources and reduce the need for costly fixes.

v. How Anomaly Detection Improves Monitoring and Troubleshooting

Let’s face it—debugging a backend system can feel like finding a needle in a haystack. With so many logs coming in at once, pinpointing the root cause of an issue can take hours or even days. But anomaly detection changes the game.

By flagging unusual patterns as they appear, anomaly detection helps developers quickly identify the source of the problem. Rather than scrolling through countless lines of logs, you can focus on the anomalies flagged by the system and investigate those areas directly. This targeted approach makes monitoring and troubleshooting much more efficient, saving both time and resources.

vi. Real-World Examples of Anomaly Detection

- Security Breaches: Let’s say your system logs show a sudden surge in failed login attempts. Traditional methods might not catch this in time, but anomaly detection algorithms would immediately flag this as an anomaly. This could be an indication of a brute-force attack, and your system can react quickly to lock down the affected accounts or block the source of the attack.

- Database Performance Issues: Imagine that your backend team notices a slowdown in database queries. By using anomaly detection, the system might highlight a specific query that’s suddenly taking up much more time than usual. This allows the team to quickly address the issue before it causes a bigger performance bottleneck.

- Server Failures: Server failures often begin with subtle signs, such as increased error rates or high resource usage. With anomaly detection, these signs are flagged early, allowing backend teams to respond to potential issues before the server goes down.

vii. Conclusion: The Importance of Anomaly Detection in Backend Development

In the fast-paced world of backend development, staying ahead of potential issues is key to maintaining a healthy and efficient system. Anomaly detection offers a way to identify problems before they become critical, improving security, system performance, and overall user experience. With machine learning-powered algorithms keeping a constant watch on your system logs, you can spend less time reacting to issues and more time optimizing your backend for success.

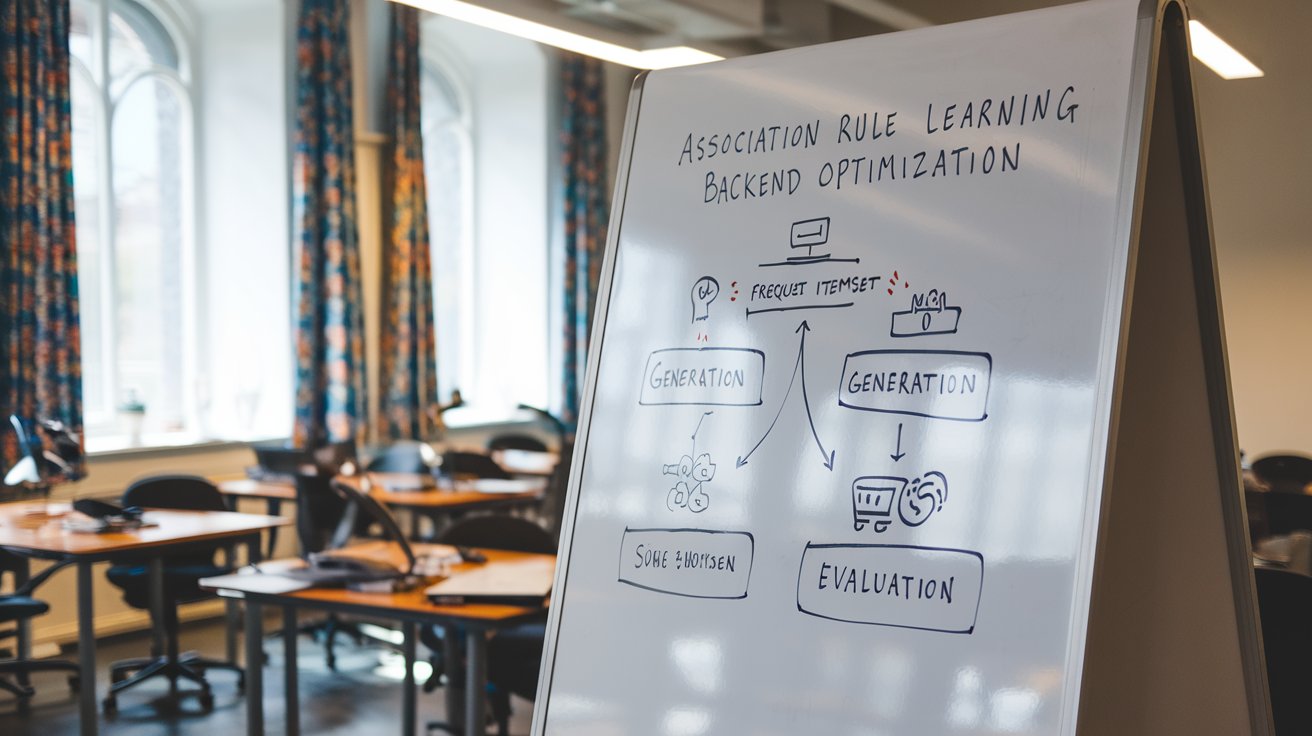

c. Recommendation Systems for API Design

Ah, the good ol’ recommendation system. We’ve all encountered them—whether it’s Netflix suggesting the next binge-worthy series, Amazon recommending that must-have gadget, or Spotify offering a curated playlist based on our mood. While these systems are all about giving users a more personalized experience, they also have a key role in backend development, especially when it comes to optimizing API design. If your API can’t provide fast, personalized recommendations, then what’s the point, right? So, let’s dive into how recommendation systems are revolutionizing API design, making it smarter, faster, and more in tune with what users want.

Ah, the good ol’ recommendation system. We’ve all encountered them—whether it’s Netflix suggesting the next binge-worthy series, Amazon recommending that must-have gadget, or Spotify offering a curated playlist based on our mood. While these systems are all about giving users a more personalized experience, they also have a key role in backend development, especially when it comes to optimizing API design. If your API can’t provide fast, personalized recommendations, then what’s the point, right? So, let’s dive into how recommendation systems are revolutionizing API design, making it smarter, faster, and more in tune with what users want.

I. What is a Recommendation System and Why Does it Matter?

At its core, a recommendation system is a machine learning-powered tool that predicts what a user might like based on their past behavior, preferences, and interactions. Imagine having a digital personal assistant that knows you so well, it can suggest the best products, movies, songs, or even news articles based on what you’ve previously enjoyed.

In backend development, recommendation systems play a crucial role in making APIs smarter. APIs that incorporate recommendation systems can provide users with a personalized experience, whether that’s suggesting products on an e-commerce platform or curating a list of articles on a news site. They analyze large amounts of data, like past user interactions, preferences, and behavior, and generate predictions or recommendations. The better the recommendation, the more engaged users will be.

But here’s the kicker: for recommendation systems to work effectively in backend development, they need to be incorporated into well-designed APIs. APIs are like the bridge that connects all the data to the front-end application—without a solid API, the recommendation system can’t do its magic.

II. How Recommendation Systems Work in Backend APIs

Now that we know what recommendation systems are, let’s explore how they actually work behind the scenes, especially in the context of APIs.

- Data Collection: For a recommendation system to be effective, it needs access to user data. This includes historical data like what products a user has viewed or purchased, what movies they’ve watched, or what music they’ve listened to. The more data it has, the more accurate the recommendations will be.

- Data Processing: Once the data is collected, the API will need to preprocess it. This is where the magic of machine learning kicks in—using algorithms like collaborative filtering, content-based filtering, or hybrid models to identify patterns in the data. Collaborative filtering, for example, looks for users with similar preferences and recommends items that those users have liked. Content-based filtering, on the other hand, uses item features (like genre, author, or brand) to make suggestions based on what the user has liked before.

- Recommendation Generation: After the data has been processed, the recommendation system generates a list of suggestions. The backend API uses this output to send personalized content to the front end. The API then formats the data and ensures it’s delivered in a way that the client can easily display it to the user.

- Real-Time Updates: One of the key aspects of modern recommendation systems is their ability to make real-time recommendations. As users interact with the API, their preferences change, and the system adapts. Real-time data allows the recommendation engine to offer fresh, relevant suggestions based on current behavior, not just historical data.

III. Types of Recommendation Systems

Recommendation systems come in various flavors, each with its own unique approach. Understanding the different types can help backend developers choose the right one for their API design.

- Collaborative Filtering: This method makes recommendations based on the past behaviors of other users. The assumption is that users who have similar tastes will like the same items. For example, if user A likes products 1, 2, and 3, and user B likes 2, 3, and 4, the system will recommend product 4 to user A because they share similar preferences.

- Content-Based Filtering: In contrast to collaborative filtering, content-based filtering uses the features of the items themselves (like categories, tags, or descriptions). If you’ve watched a lot of action movies, the recommendation system will suggest more action movies based on those similar attributes.

- Hybrid Models: A hybrid recommendation system combines multiple approaches—collaborative filtering and content-based filtering, for example. This gives the system the flexibility to make more accurate predictions by leveraging the strengths of both methods.

- Knowledge-Based Systems: These systems are based on explicit user preferences. For instance, in a travel API, if a user selects “budget-friendly” and “beach destinations,” the system will prioritize those attributes while recommending hotels or vacation packages.

IV. Benefits of Incorporating Recommendation Systems into API Design

Now, let’s talk about why you should care about recommendation systems when designing an API. Here are some of the key benefits:

- Personalized User Experience: The biggest advantage of recommendation systems is that they offer personalized experiences for users. When a backend API suggests products, content, or services based on individual preferences, users feel more engaged and are more likely to continue using the service.

- Increased Engagement and Retention: Personalized recommendations can significantly increase user engagement. For example, if Netflix keeps suggesting shows you actually want to watch, you’re more likely to stick around. In the same way, personalized product recommendations on an e-commerce site encourage users to make more purchases, increasing customer retention.

- Improved Decision-Making: Recommendation systems help users make decisions faster. Instead of scrolling endlessly through thousands of items, users are shown what they’re most likely to enjoy, which makes the decision-making process quicker and less frustrating. In backend development, this means a more efficient API design that saves users time and effort.

- Increased Revenue: Personalized recommendations aren’t just great for users—they’re also fantastic for businesses. When customers are presented with products or services they’re more likely to buy, businesses see an uptick in revenue. It’s a win-win!

V. Challenges of Implementing Recommendation Systems in API Design

While the benefits of recommendation systems are clear, implementing them within an API design can be tricky. Here are some common challenges backend developers might face:

- Data Quality and Quantity: The more data the recommendation system has, the better the suggestions. However, not all data is created equal. If the data is incomplete, noisy, or biased, the recommendations might be inaccurate, leading to frustrated users. Collecting high-quality data is critical for a successful recommendation system.

- Cold Start Problem: New users or new items in the system can pose a problem. If there isn’t enough historical data to base recommendations on, the system can’t provide personalized suggestions. Solving the cold start problem requires creative approaches, such as using content-based filtering for new items or gathering more explicit data from users when they first sign up.

- Scalability: As your API grows and more users interact with your system, it’s crucial that your recommendation system can scale. A system that works well for a few hundred users might struggle to handle millions. Ensuring that your recommendation engine can scale is key to providing fast and accurate recommendations to all users.

VI. Real-World Examples of APIs with Recommendation Systems

Let’s look at a few real-world examples of how recommendation systems are integrated into APIs:

- E-commerce APIs: Companies like Amazon use recommendation systems to suggest products based on user behavior and preferences. Their APIs are designed to quickly provide these suggestions as users browse the site, leading to higher sales and a more personalized shopping experience.

- Music Streaming APIs: Spotify uses collaborative filtering to recommend songs based on the listening history of users. Their API delivers curated playlists and song recommendations, helping users discover new music that aligns with their tastes.

- Video Streaming APIs: Netflix is a classic example of a recommendation engine in action. By analyzing viewing history and preferences, their API can suggest movies and TV shows that are more likely to resonate with users.

d. Natural Language Processing (NLP) for Automation

If you’ve ever had a conversation with Siri or Alexa, or seen a chatbot answering customer queries online, you’ve encountered Natural Language Processing (NLP) in action. But NLP isn’t just for fancy voice assistants—it’s also a game-changer in backend development, especially when it comes to automation. Imagine an API that not only understands user input but also interprets and responds in ways that feel human. That’s the magic of NLP! Let’s dig into how this technology is transforming backend systems, enabling smarter automation, and making user interactions more efficient.

If you’ve ever had a conversation with Siri or Alexa, or seen a chatbot answering customer queries online, you’ve encountered Natural Language Processing (NLP) in action. But NLP isn’t just for fancy voice assistants—it’s also a game-changer in backend development, especially when it comes to automation. Imagine an API that not only understands user input but also interprets and responds in ways that feel human. That’s the magic of NLP! Let’s dig into how this technology is transforming backend systems, enabling smarter automation, and making user interactions more efficient.

I. What is Natural Language Processing (NLP)?

Before diving into how NLP fits into backend automation, let’s first understand what NLP is all about. NLP is a branch of artificial intelligence (AI) that enables machines to understand, interpret, and respond to human language in a meaningful way. It’s about breaking down human speech or text into data that a computer can analyze, process, and understand.

Think of it like this: whenever you talk to a voice assistant or type a query into Google, NLP is at work behind the scenes. It’s the technology that converts your words into something a computer can process, understand, and respond to. In backend systems, NLP plays a pivotal role in automating tasks that previously required human intervention.

For instance, NLP helps backends interpret unstructured data, such as customer emails, social media comments, and even voice inputs. Instead of relying on rigid commands, NLP-powered systems can handle natural conversations and text—making automation more flexible and efficient.

II. How NLP Powers Automation in Backend Development

NLP’s role in automation is huge, especially in backend systems. Here’s a breakdown of how NLP can turbocharge automation:

- Automating Customer Support with Chatbots:

Chatbots are probably the most common example of NLP in action. Instead of a human answering every single customer query, NLP allows chatbots to interpret and respond to customer inquiries automatically. The backend API integrates the chatbot with NLP algorithms to understand the intent behind user queries, interpret the data, and provide accurate responses without human intervention.Example: Imagine a customer texting a company’s customer service bot, saying, “I want to cancel my subscription.” NLP interprets the request, triggers the appropriate automation, and sends a cancellation confirmation without the need for a human agent.

- Text Classification for Automation:

Text classification is another area where NLP shines. It involves categorizing text data into predefined categories. In backend systems, this can be used to automate tasks like categorizing customer support tickets, sorting emails, or even analyzing social media posts. By automatically tagging and sorting content, NLP helps systems streamline processes, prioritize important tasks, and cut down manual workloads.Example: Imagine you’re a backend developer building an API for an e-commerce platform. With NLP, the system can automatically categorize customer feedback into groups like “product issue,” “shipping concern,” or “positive review,” allowing your system to route the issues to the correct department without human intervention.

- Sentiment Analysis for Decision Making:

Sentiment analysis is another powerful tool that NLP offers. It allows backend systems to automatically analyze the sentiment behind a piece of text—whether it’s positive, negative, or neutral. By analyzing customer reviews, social media posts, or support tickets, sentiment analysis can help businesses automate decision-making and prioritize tasks based on urgency.Example: Let’s say you’re running a backend system that handles customer feedback for a product. Using sentiment analysis, the system can automatically identify whether a review is positive or negative. If the review is negative, it might trigger an automated follow-up to resolve the issue, while positive reviews can be logged and stored for future use.

- Speech Recognition for Voice-Activated Automation:

Speech recognition, an NLP application, is increasingly popular in automation. Voice assistants like Google Assistant or Amazon Alexa use NLP to interpret spoken language, enabling users to control devices or get information through voice commands. On the backend, NLP converts voice inputs into text, and then the system processes this text to trigger automated actions.Example: Picture a backend system for a home automation device. When a user says, “Turn off the lights in the living room,” NLP interprets the command, the backend processes it, and the lights are turned off—all without human intervention.

III. Key Benefits of Using NLP for Automation in Backend Development

Now that we’ve explored how NLP powers automation, let’s talk about the benefits it brings to backend development.

- Enhanced User Experience:

The ability of NLP systems to understand natural language allows users to interact with machines more intuitively. Whether it’s chatting with a bot, sending a voice command, or asking a question in plain text, NLP ensures that interactions feel natural and efficient. By automating responses based on user input, backend systems become more user-friendly and reduce the need for human agents.Pro Tip: The more accurate your NLP system, the more seamless and engaging the user experience becomes. Users don’t want to feel like they’re talking to a robot—they want smooth, natural interactions!

- Faster and More Efficient Automation:

NLP-based automation can process a massive amount of data quickly, far beyond human capabilities. Instead of relying on manual intervention to categorize data, answer queries, or perform repetitive tasks, NLP can handle all of these processes automatically. This leads to faster turnaround times and greater efficiency in backend operations.Example: Instead of having customer support agents manually sorting through emails and tickets, an NLP-powered backend can automatically categorize and prioritize them. This cuts down on processing time and allows agents to focus on more complex issues.

- Cost Savings:

By automating tasks like customer service, data categorization, and sentiment analysis, businesses can reduce the need for human intervention in repetitive tasks. This translates to cost savings, as businesses don’t have to hire as many staff members to handle basic queries or data processing.Fun Fact: It’s estimated that businesses can save up to 30% in operational costs by automating tasks with NLP-powered systems!

- Scalability:

As businesses grow, the volume of interactions, customer queries, and content data increases. NLP systems allow backend processes to scale automatically without the need for hiring additional human resources. Whether it’s handling millions of customer inquiries or processing large amounts of text data, NLP can handle it all at scale.Example: An NLP-powered chatbot can seamlessly handle a surge of customer queries during a sale, while still providing timely responses to every customer.

IV. Real-World Applications of NLP in Backend Automation

Let’s take a look at some real-world examples of NLP being used for backend automation.

- E-Commerce Automation:

Many e-commerce platforms use NLP to automate product recommendations, customer service, and even product reviews. By understanding customer queries and automatically routing them to the appropriate service, these platforms can provide an efficient and personalized shopping experience. - Healthcare Automation:

NLP is increasingly used in healthcare systems to automate the processing of medical records, clinical notes, and patient feedback. This helps doctors and medical professionals streamline their workflows and focus more on patient care. - Customer Support Chatbots:

Many companies integrate NLP-powered chatbots into their customer support systems. These bots can answer a wide range of queries, provide troubleshooting steps, and escalate issues to human agents when needed. By automating routine customer interactions, businesses can save time and money while improving customer satisfaction.

V. Challenges of Using NLP in Backend Automation

Despite its advantages, implementing NLP for automation can come with some challenges:

- Language Complexity:

Human language is inherently complex and often ambiguous. NLP systems may struggle to understand slang, idiomatic expressions, or regional dialects, leading to misinterpretations. To improve accuracy, NLP models need to be trained on diverse data and continuously updated. - Data Privacy Concerns:

When using NLP to process sensitive data like customer queries or healthcare records, businesses need to ensure that data privacy is maintained. This can be particularly challenging when handling personal or confidential information. - Training Data Requirements:

For NLP to be effective, it requires large amounts of high-quality training data. Gathering this data can be time-consuming and expensive, and poor-quality data can lead to inaccurate results.

In the world of backend development, NLP is not just a buzzword—it’s a powerhouse that drives smarter automation, faster response times, and better user experiences. By incorporating NLP into backend systems, developers can build solutions that not only understand human language but also act upon it, reducing manual work and improving operational efficiency. So, if you’re looking to elevate your backend development game, it’s time to embrace NLP and let automation do the heavy lifting!

e. Reinforcement Learning for Dynamic Task Scheduling

When you think about how complex systems handle multiple tasks at the same time—whether it’s running a cloud service or managing a large e-commerce platform—you might wonder how these systems prioritize and schedule tasks efficiently. Well, that’s where Reinforcement Learning (RL) comes in! It’s like giving your backend system the ability to learn from experience and make intelligent decisions about task scheduling based on changing conditions.

When you think about how complex systems handle multiple tasks at the same time—whether it’s running a cloud service or managing a large e-commerce platform—you might wonder how these systems prioritize and schedule tasks efficiently. Well, that’s where Reinforcement Learning (RL) comes in! It’s like giving your backend system the ability to learn from experience and make intelligent decisions about task scheduling based on changing conditions.

In backend development, dynamic task scheduling is critical because systems often face the need to balance multiple tasks, deal with changing workloads, and ensure that resources are used efficiently. Reinforcement Learning provides a way to tackle these challenges by allowing systems to learn optimal scheduling strategies over time, just like how a self-driving car learns to drive better the more it experiences different driving conditions.

Let’s dive deeper into how RL is transforming backend task scheduling and how you can use it to supercharge your systems!

I. What is Reinforcement Learning (RL)?

Before jumping into the role of RL in dynamic task scheduling, let’s first break down what RL is. Reinforcement Learning is a type of machine learning where an agent (think of it as a virtual decision-maker) learns by interacting with an environment, receiving feedback (rewards or penalties), and improving its actions to achieve a goal.

In simpler terms, RL is like a video game. When you play a game, you try different strategies to score points (rewards) and avoid losing lives (penalties). The more you play, the better you get at the game because you learn from your mistakes and adjust your strategies.

In backend development, the “agent” is the system managing tasks, and the “environment” is the set of tasks and available resources. The system makes scheduling decisions, receives feedback (like whether a task was completed on time or resources were overused), and adjusts its strategy for future tasks.

II. How Reinforcement Learning Enhances Dynamic Task Scheduling

Task scheduling is a critical function in backend systems, especially when managing tasks like processing data requests, running background processes, or handling user interactions. Traditional scheduling techniques often rely on static rules or simple priority-based systems, but as tasks become more complex and resource demands fluctuate, these methods can lead to inefficiencies.

Here’s where RL steps in to make task scheduling smarter and more adaptive:

- Optimizing Resource Allocation:

In any backend system, there are limited resources (like CPU, memory, or network bandwidth) that need to be distributed across multiple tasks. RL helps systems figure out the best way to allocate resources to tasks based on past experiences. It learns to allocate resources dynamically, ensuring that tasks are completed on time without overwhelming the system.Example: Imagine a cloud service handling multiple customer requests. Using RL, the system can learn how to allocate bandwidth efficiently—prioritizing critical tasks (like payments or login requests) while ensuring that less important tasks (like generating reports) don’t consume too much bandwidth.

- Learning Optimal Task Sequences:

Some tasks depend on others. For example, in a data processing pipeline, one task might need to finish before another can start. RL can optimize the order of task execution to ensure that each task is performed at the right time, with the least amount of delay.Example: Consider a backend system processing customer orders. With RL, the system can learn the best sequence for fulfilling orders—starting with high-priority orders, then processing routine orders while keeping track of resource usage to avoid bottlenecks.

- Adapting to Changing Workloads:

One of the key benefits of RL is its ability to adapt. Unlike static scheduling methods, RL doesn’t just follow predefined rules—it continuously learns and adjusts based on changing workloads. Whether it’s handling a sudden spike in traffic or an unexpected server failure, RL allows the backend system to adapt and maintain performance without manual intervention.Example: During peak shopping seasons, like Black Friday, a backend system might experience a sudden spike in user traffic. With RL, the system can learn from past traffic patterns and adjust its scheduling strategies in real-time, ensuring that tasks like inventory updates and payment processing remain smooth, even under heavy load.

III. Benefits of Using Reinforcement Learning in Task Scheduling

Now that we understand how RL enhances task scheduling, let’s explore the key benefits it brings to backend systems.

- Efficiency and Resource Optimization:

One of the most significant advantages of RL is its ability to optimize resource usage. By continuously learning from its environment, an RL-powered backend system can ensure that resources are allocated in the most efficient way possible. This reduces waste and maximizes throughput.Pro Tip: The more data the RL system processes, the better it gets at predicting resource usage. Over time, it becomes an expert in task scheduling, reducing the chances of overloading the system.

- Scalability:

As backend systems scale and the number of tasks grows, managing them manually or with static rules becomes increasingly difficult. RL offers a scalable solution by automatically adjusting to changes in task volume and system capacity. This means that whether your system handles 100 tasks a day or 100,000, RL will adapt to ensure everything runs smoothly.Example: In a large-scale e-commerce platform, RL can dynamically schedule tasks such as payment processing, inventory updates, and order tracking based on real-time demand, ensuring the system can scale without crashing under heavy loads.

- Improved Task Completion Times:

RL helps to minimize delays by learning the optimal task sequences and resource allocation strategies. As a result, tasks are completed faster, improving overall system performance. This is especially important in systems where speed is critical, such as in real-time data processing or time-sensitive transactions.Fun Fact: Some companies have reported a 30-40% reduction in task completion times after integrating RL into their backend scheduling systems!

- Adaptability to Changing Conditions:

RL systems are highly adaptable. They learn from new experiences and adjust their strategies accordingly. If the system’s workload changes, or if there’s a sudden change in available resources (such as server downtime or a network failure), RL can quickly adapt to ensure tasks are still completed efficiently.Example: Let’s say a backend system handles an e-learning platform. If students are suddenly uploading videos or accessing resources in large numbers, the RL system can adapt to prioritize those requests and delay non-urgent tasks like updating content.

IV. Real-World Applications of Reinforcement Learning for Task Scheduling

Reinforcement Learning isn’t just a theoretical concept—it’s already being used in various industries to improve backend task scheduling. Here are some real-world examples:

- Cloud Computing:

In cloud services, dynamic task scheduling is crucial to ensure efficient resource allocation across virtual machines (VMs) and servers. RL is used to dynamically allocate resources based on demand, preventing over-provisioning (which wastes resources) and under-provisioning (which causes delays or failures). - Data Centers:

Data centers often face challenges with load balancing and task scheduling. RL can help automate the process of allocating workloads to servers, optimizing energy consumption, and ensuring that resources are used efficiently across the entire data center. - Robotics and Autonomous Systems:

In robotics, task scheduling plays a key role in determining which tasks the robot should prioritize. RL allows robots to learn from their environment, adjust their behavior in real-time, and handle tasks more efficiently. For example, an autonomous delivery robot may need to decide whether to pick up a package first or deliver an existing one.

V. Challenges and Considerations

While RL is a powerful tool for dynamic task scheduling, it does come with its own set of challenges:

- Training Time:

RL systems require a significant amount of training data to learn optimal strategies. This means that the system may take some time to become proficient at scheduling tasks, especially in complex environments. - Complexity:

Implementing RL for task scheduling can be complex, particularly when dealing with large-scale systems or diverse types of tasks. The algorithm needs to be carefully designed and tested to ensure that it performs as expected in real-world scenarios. - Data Dependency:

The performance of RL models is highly dependent on the quality and quantity of data. Without sufficient data, RL models may struggle to learn optimal strategies, leading to suboptimal task scheduling.

Reinforcement Learning is transforming the way backend systems handle task scheduling. By making systems more adaptable, efficient, and intelligent, RL allows developers to create solutions that can scale, optimize resources, and improve performance over time. So, if you’re looking to level up your backend development, integrating RL into your task scheduling process is a smart move!

f. Machine Learning for Security Enhancement

In the fast-paced digital world, where cyber threats are evolving at lightning speed, ensuring the security of backend systems has become more critical than ever. From protecting sensitive data to preventing unauthorized access, security has always been a priority for any system. But, just as technology advances, so do the tactics of malicious actors. So, how do we keep up? Enter Machine Learning (ML). ML, with its ability to learn from data and predict future threats, is a game-changer in backend security.

In the fast-paced digital world, where cyber threats are evolving at lightning speed, ensuring the security of backend systems has become more critical than ever. From protecting sensitive data to preventing unauthorized access, security has always been a priority for any system. But, just as technology advances, so do the tactics of malicious actors. So, how do we keep up? Enter Machine Learning (ML). ML, with its ability to learn from data and predict future threats, is a game-changer in backend security.

Imagine you have a security system that not only reacts to known threats but also learns from new, previously unseen ones and adapts its defenses in real time. That’s exactly what ML brings to the table. By implementing ML in backend development, businesses can proactively guard against attacks like never before. Let’s explore how ML is revolutionizing backend security and how it can protect your systems from a wide range of security vulnerabilities.

I. Why is Security Important in Backend Development?

Before diving into how ML helps, it’s essential to understand why security is so vital in backend systems. A backend system is like the engine of a car; it’s where everything happens. When users interact with an app, their requests are processed in the backend, and sensitive information (like passwords, payment details, or personal data) often flows through it.

If the backend system is compromised, it could lead to data breaches, loss of user trust, financial losses, or even legal consequences. That’s why safeguarding the backend infrastructure is critical. Traditional security methods, like firewalls or antivirus software, are useful, but they can’t always handle the complexity or unpredictability of modern cyber threats. That’s where ML comes in—its ability to learn, predict, and adapt makes it a powerful tool for reinforcing backend security.

II. How Machine Learning Enhances Backend Security

Machine learning enhances backend security by providing systems with the ability to automatically detect threats, respond to them, and learn from past attacks. Traditional security methods are static, meaning they can only react to known threats based on predefined rules. But ML is dynamic—it adapts and improves over time, making backend systems smarter and more resilient.

Let’s explore some specific ways ML boosts backend security:

III. Machine Learning for Threat Detection

One of the most significant ways ML can enhance backend security is by identifying potential threats before they cause harm. By analyzing system logs, traffic patterns, and user behavior, ML models can detect anomalies or suspicious activities in real time.

- Intrusion Detection Systems (IDS):

Traditional IDS look for known patterns of malicious activity. However, cyberattacks are constantly evolving, and new techniques are always being developed. ML-based IDS goes a step further by using algorithms that learn from past attack data. Over time, these systems get better at detecting novel or previously unseen attacks.Example: Imagine a situation where an attacker is trying to brute-force their way into a system by guessing passwords. An ML-based IDS can learn the typical login behavior of users and recognize when an unusual number of failed login attempts occurs, alerting the system of a potential attack.

- Malware Detection:

Malware can take many forms, such as viruses, worms, or Trojans. ML-powered systems can analyze the behavior of files and processes to determine if they’re exhibiting malicious activity. These systems don’t rely on a list of known threats—they look for patterns in file behavior and use this information to identify malware.Example: Let’s say a backend system is receiving files from external sources. An ML system can analyze the file’s behavior to determine if it is attempting to access sensitive data or propagate itself across the network. If the file exhibits suspicious behavior, the system can block it before it causes any damage.

IV. User Behavior Analytics (UBA) for Threat Prevention

Another powerful application of ML in backend security is User Behavior Analytics (UBA). UBA uses ML to track and analyze user behavior within the system. By establishing a baseline of “normal” user activity, the system can identify deviations that may signal potential security threats, such as unauthorized access or insider threats.

- Anomaly Detection in User Behavior:

ML models can continuously monitor user behavior in the backend, such as login times, access patterns, and frequency of actions. If a user suddenly logs in from a different geographical location or accesses sensitive data they normally don’t, ML can flag this behavior as potentially suspicious.Example: Imagine a backend system where an employee accesses the company’s financial records every day, but one day, they try to access data outside their usual scope, at a time when they normally wouldn’t be working. An ML model could flag this as a potential security risk, allowing the system to automatically block further access or notify security teams.

- Insider Threat Detection:

While external cyberattacks often get the most attention, insider threats—where employees or contractors misuse their access privileges—are just as dangerous. ML can help detect these threats by identifying unusual activity within the system.Example: If an employee suddenly downloads large amounts of sensitive data or accesses systems they don’t usually work with, an ML-powered backend system could immediately flag this behavior and take action to prevent data theft or loss.

V. Automating Security Response with ML

Another key advantage of integrating ML into backend security is the ability to automate security responses. Rather than relying solely on human intervention to react to potential threats, ML systems can autonomously take action based on what they’ve learned about potential risks.

- Automated Blocking of Malicious IPs:

If an ML model detects that a particular IP address is associated with malicious activity, such as multiple failed login attempts or suspicious traffic patterns, it can automatically block that IP address in real-time. - Predictive Security Measures:

ML doesn’t just react to threats—it can also predict potential future threats. By analyzing patterns in data over time, ML systems can forecast where attacks are likely to happen, allowing teams to implement proactive security measures.

VI. Advantages of Machine Learning in Security Enhancement

There are several compelling reasons why integrating ML into backend security is a smart move:

- Real-Time Threat Detection and Response:

ML models can analyze vast amounts of data in real time, allowing them to identify threats and respond before they can do significant damage. Traditional methods may take too long to detect and respond to threats, but ML provides a fast, automated solution. - Improved Accuracy:

Since ML models learn from data, they can improve their accuracy over time. As they process more data, they become better at detecting threats, reducing the chances of false positives and false negatives. - Adaptability to Evolving Threats:

Cyberattacks are always evolving. ML systems are dynamic and can adapt to new types of threats, making them more resilient than traditional security tools that may only recognize known attacks.

VII. Real-World Applications of Machine Learning in Security

Many industries are already leveraging the power of ML to enhance security in their backend systems:

- Financial Institutions:

Banks and financial institutions use ML for fraud detection, monitoring transactions in real time to spot unusual activity that might indicate fraudulent transactions. By analyzing transaction patterns, ML systems can identify potential fraudsters and prevent them from accessing accounts. - Healthcare Industry:

In healthcare, where patient data is highly sensitive, ML models help detect breaches and ensure compliance with privacy regulations such as HIPAA. They can identify anomalous access to patient records, ensuring that only authorized personnel have access. - E-Commerce Platforms:

E-commerce websites often deal with large amounts of sensitive customer data, such as credit card information. ML models are used to monitor and protect customer transactions, flagging any suspicious activities like account takeovers or payment fraud.

VIII. Challenges and Considerations

Despite its potential, using ML for security enhancement is not without challenges. For instance:

- Data Privacy:

Machine learning models require large amounts of data to function effectively. However, handling sensitive data for security purposes must be done with care, ensuring compliance with privacy laws and regulations. - Model Training and Maintenance:

To be effective, ML models must be trained on high-quality data. The process of training models can be time-consuming, and the models need regular updates to keep up with new types of threats. - Complexity:

Integrating ML into security systems requires specialized knowledge and resources. Organizations must ensure they have the expertise to properly deploy and maintain these models.

In conclusion, ML isn’t just a buzzword in backend security—it’s a game-changer. By offering real-time threat detection, predictive capabilities, and automated responses, ML transforms how systems protect themselves against evolving cyber threats. With its ability to continuously learn from data, ML ensures that backend systems can stay one step ahead of attackers, keeping user data safe and secure. So, if you haven’t yet explored how ML can enhance your backend security, now’s the time to start!

g. Automated Machine Learning (AutoML) for Model Deployment

Machine learning (ML) has certainly come a long way in revolutionizing industries and providing businesses with new opportunities to enhance user experiences, streamline operations, and improve security. But as powerful as machine learning is, there’s still one challenge many organizations face: deploying and maintaining machine learning models in a production environment. The good news? Enter Automated Machine Learning (AutoML), the hero in this story, transforming the way models are deployed and maintained.

Machine learning (ML) has certainly come a long way in revolutionizing industries and providing businesses with new opportunities to enhance user experiences, streamline operations, and improve security. But as powerful as machine learning is, there’s still one challenge many organizations face: deploying and maintaining machine learning models in a production environment. The good news? Enter Automated Machine Learning (AutoML), the hero in this story, transforming the way models are deployed and maintained.

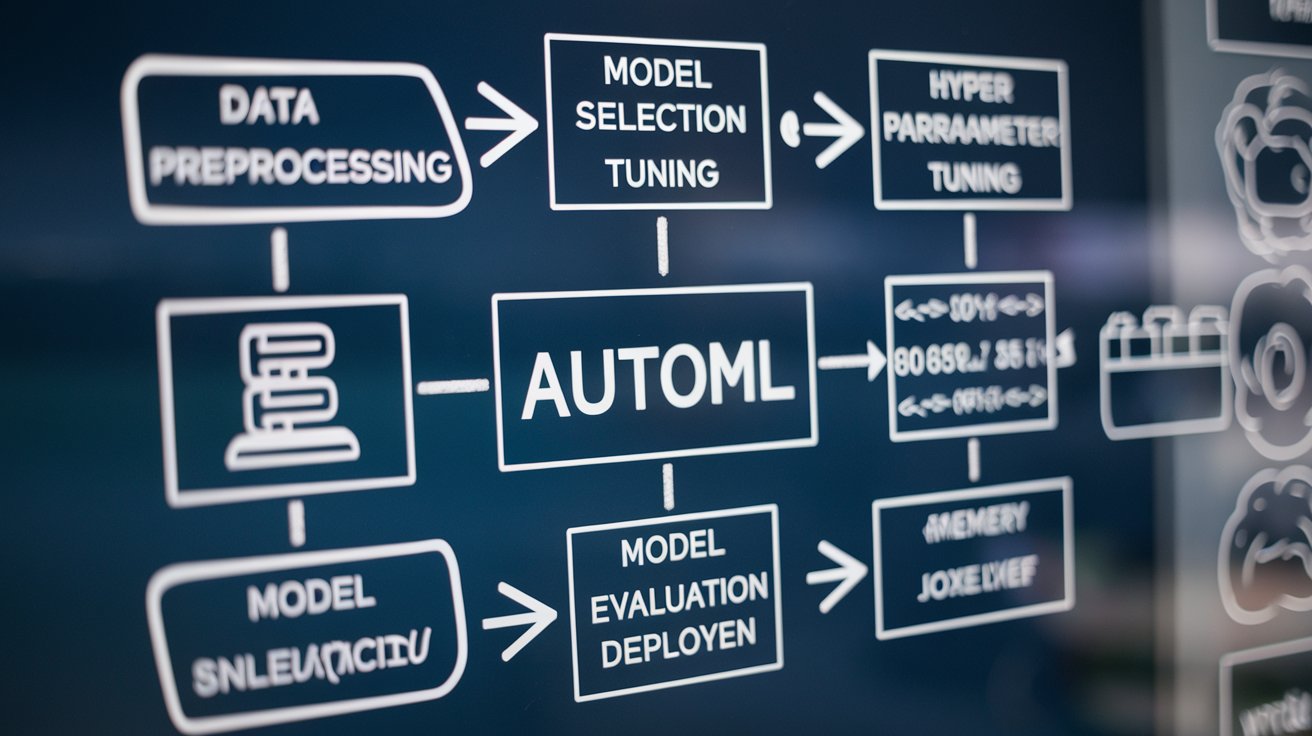

AutoML is a game-changer, allowing businesses to create powerful machine learning models without requiring expert-level knowledge of data science. By automating the time-consuming aspects of model development, like feature selection, model training, and hyperparameter tuning, AutoML enables faster and more efficient model deployment. Let’s dive into how AutoML works and how it is transforming backend development.

I. What is AutoML and Why is It Important?

Before we get into the nitty-gritty, let’s take a step back and explore what AutoML really is. In simple terms, Automated Machine Learning refers to the process of automating the entire workflow of machine learning model development, from data preprocessing to model deployment. The goal is to make machine learning accessible to a wider audience, including those without a strong background in data science.

Historically, deploying machine learning models required a deep understanding of algorithms, data processing, and tuning hyperparameters. This complexity could make it difficult for companies without data science teams to leverage the full potential of machine learning. AutoML solves this problem by automating key aspects of the ML workflow, making it easier for businesses to integrate machine learning into their backend systems.

In backend development, where speed, efficiency, and scalability are crucial, AutoML allows developers to quickly deploy machine learning models that can improve processes such as user behavior prediction, recommendation systems, or anomaly detection. By doing so, AutoML reduces the time and effort required for model deployment while still delivering powerful insights.

II. Key Benefits of AutoML for Backend Development

So why is AutoML such a game-changer? Well, let’s explore some of the major benefits it brings to backend development:

- Faster Model Development and Deployment

The process of building a machine learning model can be lengthy and resource-intensive. With AutoML, many steps—like data preprocessing, model selection, and hyperparameter tuning—are automated. This significantly reduces the time it takes to develop a model, allowing businesses to deploy their models faster. As a result, backend systems can be enhanced with machine learning capabilities in record time.Example: Imagine you’re working on a recommendation system for an e-commerce website. Using AutoML, you can quickly build and deploy a model to personalize product recommendations for users without spending weeks training and fine-tuning the model.

- No Need for Deep Data Science Expertise

AutoML levels the playing field by allowing developers and business analysts—who may not have an extensive background in data science—to create machine learning models. This is particularly beneficial for small businesses or startups that may not have dedicated data science teams but still want to harness the power of machine learning.Example: A developer at a small startup can use AutoML to build a model for dynamic pricing based on market trends and customer behavior. This means the developer can focus on integrating the model into the backend without needing advanced knowledge in machine learning algorithms.

- Improved Accuracy and Optimization

One of the most time-consuming aspects of machine learning is the process of tuning hyperparameters to optimize model performance. AutoML automates this process using techniques like grid search or random search, ensuring the best-performing model is selected for deployment. This results in more accurate models with optimized performance, reducing the risk of errors or mispredictions.Example: For an anomaly detection system in a backend infrastructure, AutoML can test various models and automatically select the one that identifies suspicious activities with the highest accuracy. This saves time while ensuring the model performs well in real-world conditions.

- Reduced Cost and Resource Consumption

Traditional machine learning workflows can be resource-intensive, requiring significant computational power to train multiple models. AutoML systems automate this process, which often leads to more efficient use of resources. With AutoML, backend systems can run machine learning models on a smaller budget, making it accessible for companies of all sizes.Example: A small online platform wants to predict user churn. Using AutoML, the platform can generate a churn prediction model without having to invest in expensive computational resources.

III. How AutoML Works

Now that we understand the benefits of AutoML, let’s break down how it works in backend development. AutoML automates several stages of the machine learning workflow, each of which we’ll cover below:

- Data Preprocessing

Raw data is often messy and incomplete, which can hinder the performance of machine learning models. AutoML takes care of data preprocessing by automatically handling missing values, normalizing data, and transforming features into the most useful format.Example: If you’re building a model to predict sales, AutoML will automatically clean up your sales data by handling missing values and converting categorical variables (like product types) into numerical formats.

- Model Selection

Choosing the right algorithm for the problem at hand can be challenging, even for experienced data scientists. AutoML automatically selects the most appropriate machine learning algorithm based on the type of problem you’re trying to solve—whether it’s regression, classification, or clustering.Example: For a fraud detection model, AutoML might select a classification algorithm like Random Forest or XGBoost, depending on the features of the data.

- Hyperparameter Tuning

Hyperparameters are critical in fine-tuning the performance of a machine learning model. AutoML handles hyperparameter tuning by testing various combinations of parameters to identify the optimal configuration. This saves time compared to manually adjusting parameters.Example: In a model designed to predict customer purchasing behavior, AutoML will experiment with different hyperparameters to optimize the model’s performance and prediction accuracy.

- Model Evaluation